Revolutionizing Image Processing with Advanced Vision Science

Don't worry: there is more in-depth information below if you want to learn more!

TONEO Prime is a plug-in compatible with Premiere Pro for Mac that will save you a lot of color grading time.

Soon, we will roll out Premiere Windows, Final Cut Pro, and DaVinci Resolve versions; watch this space.

The Challenge of Traditional Color Grading

In cinema postproduction, the initial step, known as the technical grade, aims to ensure that the on-screen image closely resembles what an observer would perceive in the real-world scene. This process has traditionally been manual, relying on the expertise of colorists who adjust colors, brightness, and contrast to achieve a natural look. Automated methods have struggled to meet the high standards of the cinema industry because they lack the adaptability of human vision.

How Toneo Overcomes These Challenges

Toneo is designed to address the limitations of traditional automated methods. It is fully image-adaptive, meaning it adjusts the image based on the full statistics of what the camera captures, emulating the human visual system’s ability to adapt to varying conditions. Developed through years of research in vision science and optimized by cinema professionals, Toneo produces images that are ready for display with the same detail, contrast, and color accuracy that a skilled colorist would achieve manually.

TONEO Prime's human vision color model analyses your shot and instantly calculates the settings for a polished, faithful representation of the scene with natural colours, contrast and beautifully-preserved skin tones. From there, you can punch it up or dial it back in a matter of seconds.

Why Toneo Stands Out

What is TONEO? The long version for geeks!

The technical grade is always a manual process, performed by skilled artists/technicians

In cinema postproduction, the first pass of the color grade, called the technical grade, aims to modify images so that their appearance on screen matches what the real-world scene would look like to an observer in it, i.e. the image appearance is made to look as natural as possible [1].

This process is performed manually by the colorist: the cinema industry does not rely on automatic methods and resorts instead to challenging work by very skilled technicians and color artists. These practices can be explained by the fact that the cinema industry has almost impossibly high image quality standards, beyond the reach of all automated methods.

Furthermore, cinema artists complain that the software tools they work with are based on very basic vision science; for instance, noted cinematographer Steve Yedlin says that: “Color grading tools in their current state are simply too clunky [...]. Though color grading may seem complex given the vast number of buttons, knobs and switches on the control surface, that is only the user interface: the underlying math that the software uses to transform the image is too primitive and simple to achieve the type of rich transformations [...] that define the core of the complex perceptual look”.

Why? Because vision is highly adaptive, while automatic methods are not

The results of automatic methods are not good enough for the extremely high quality standards of the cinema industry because these methods are rooted in vision models from the 20th century that do not adapt to the image, while vision is highly adaptive.

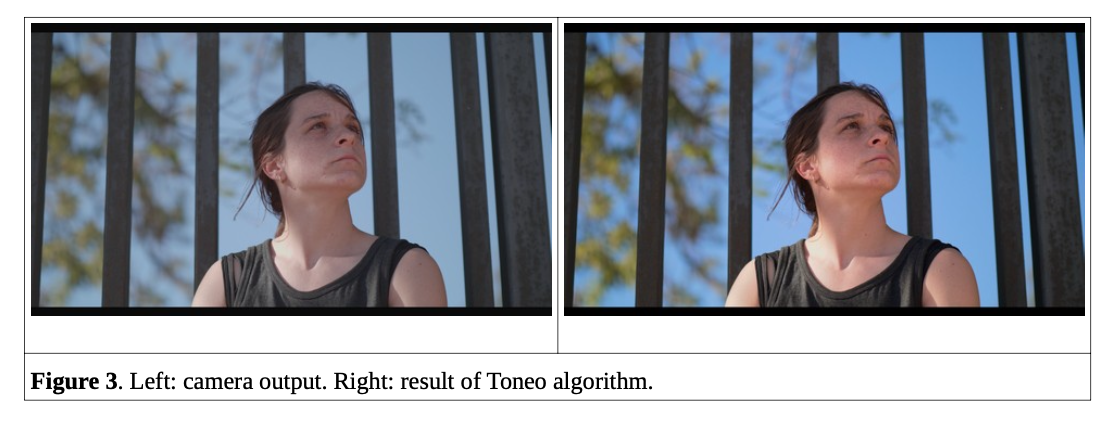

Adaptation is an essential feature of the neural systems of all species [2]: it constantly adjusts the sensitivity of the visual system, taking into account multiple aspects of the statistics of the input stimulus. This is done via processes that aren’t fully understood and that contribute to making human vision so hard to emulate with devices [3]. Through adaptation, the way we perceive brightness and color depends heavily on the statistics of the whole field of view, and changes very rapidly with the image itself. As a consequence, if we are outdoors in daytime and look at the scene that surrounds us, its colors, brightness and contrast will appear different from those of a picture of the same scene viewed on a screen, indoors in a dim environment.

We need to compensate for adaptation when we show the picture on the screen. This is what colorists do manually, and what automatic methods can’t do properly. Automatic methods are either (1) based on classic vision models, which are image-independent rather than adaptive and were developed using synthetic images; or (2) AI-based, in which case the results often show color and contrast issues that generally take substantial time to fix.

The bottom line is that the correct, perceptually faithful presentation of an image on a screen requires an accurate image-adaptive method that takes into account the full image statistics, that has been developed and tested with natural, not synthetic, images, and that is able to provide results that cinema professionals can approve. This is where Toneo comes in.

TONEO: fully image-adaptive, best quality results

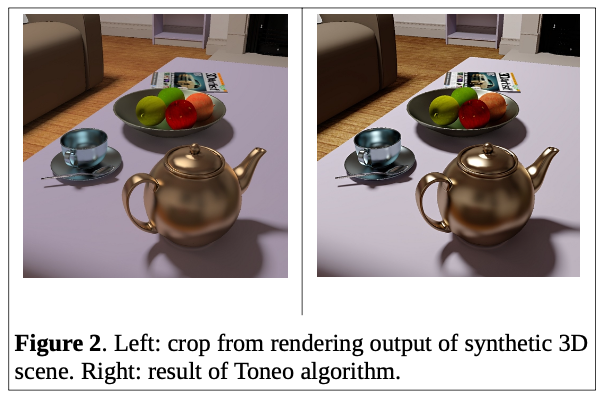

Toneo takes a RAW image directly from the sensor and automatically produces a natural-looking result with optimal appearance that is ready for display: shadows, midtones, highlights, skin tones, contrast and color in general, everything in the resulting image accurately matches the perception that someone present in the scene would have had. Toneo is based on vision science from the 21st century, is fully adaptive, has been developed with cinema footage, and cinema professionals have optimized its parameters.

The scientific underpinnings of our technology are the result of several years of academic research at a top European university, and are presented in a paper [4] and a book [5]. The research was carried out simultaneously on three main fronts: creating images with the same level of contrast that humans can perceive, adapting the contrast and colors appearing in a movie to the capabilities of the display being used, and developing a method to evaluate how good the perceptual match is between the image that is displayed and the image that can be seen at the shooting scene. A significant, combined outcome of these three research lines has been a vision model for contrast perception that can be realized as an image-dependent non-linear transformation that accurately reproduces the detail and contrast visible in the original scene.

The model is based on findings from psychophysical and neurophysiological studies and is well suited to the statistics of natural images. The resulting transform is automatic (no need for user-selected parameters) and of extremely low computational complexity, making it a good candidate for real-time applications. It produces images and videos that look natural, without any halos, spurious colors or artifacts. A European patent has been granted. The visual quality of the results, as validated by cinema professionals, is very high, outperforming the most widely used industrial methods as well as surpassing the state of the art in the academic literature.

The advantage of our technology in terms of image quality implies a better emulation of visual perception, which comes from incorporating into our algorithm a number of properties that have been observed in neural response data and that visibly impact the results. But the core of our method isn’t just the accurate modeling of retinal processes: the contribution of cinema professionals like cinematographers and colorists has been essential in order to properly adjust and fine-tune our framework. Colorists are capable of adjusting the appearance of images so that, when we look at them on a screen, our subjective impression matches what we would perceive with the naked eye at the real-world scene. Skilled colorists are able to achieve what state-of-the-art automated methods cannot because the manual techniques of cinema professionals seem to have a “built-in” vision model. Therefore, one could argue that by optimizing Toneo the cinema artists have been optimizing a vision model, simple as it might be. And very often the results of Toneo are surprisingly close to those of a manual grade by a skilled colorist.

Our method is very robust, with a performance in terms of image quality that is consistently excellent. A key to our success in this regard lies in the fact that Toneo does not rely on artificial neural networks, which have been shown to have very significant limitations in modeling visual perception [6] and do not generalize well even when using huge amounts of training data [7,8], i.e. their performance decays considerably when they are applied to images that are different from the ones used for training the network.

Other domains where Toneo could be applied

The contrast in a scene is measured by the ratio, called dynamic range, of light intensity values between its brightest and darkest points. Common natural scenes may have a contrast of 1,000,000:1 or more, and our visual system allows us to perceive contrasts of roughly 10,000:1, but the vast majority of displays, including digital cinema projectors, have a limited contrast capability on the order of 1,000:1 and below.
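To make these figures easier to compare, here is a minimal Python sketch that expresses a contrast ratio in orders of magnitude and in photographic stops (doublings of light). The function name `dynamic_range` and the returned keys are our own illustrative choices, not part of Toneo.

```python
import math

def dynamic_range(brightest: float, darkest: float) -> dict:
    """Describe a contrast ratio as a ratio, orders of magnitude, and stops."""
    if brightest <= 0 or darkest <= 0:
        raise ValueError("light intensities must be positive")
    ratio = brightest / darkest
    return {
        "ratio": ratio,
        "orders_of_magnitude": math.log10(ratio),  # powers of ten
        "stops": math.log2(ratio),                 # doublings of light
    }

# The three ratios quoted in the text above.
scene = dynamic_range(1_000_000, 1)
vision = dynamic_range(10_000, 1)
display = dynamic_range(1_000, 1)

for name, dr in [("scene", scene), ("vision", vision), ("display", display)]:
    print(f"{name}: {dr['orders_of_magnitude']:.1f} orders, {dr['stops']:.1f} stops")
```

In these terms, the scene spans about 20 stops, human vision about 13, and a typical display about 10, which is the gap that any display-ready image has to bridge.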

It is not uncommon for camera sensors to have a dynamic range of 3 or 4 orders of magnitude, therefore matching in theory the range of human visual perception. But because of the constrained range of displays, the image signals captured by sensors have to be non-linearly transformed so that their contrast is reduced, while trying to maintain the natural appearance and visibility of details of the original. All cameras perform this transformation when they record a photo or video in any standard format; in professional situations cameras are often set to output images as they come out of the sensor, in a 'raw' format that isn't ready for display, so that the non-linear transform can be manually performed by a skilled technician/artist later in post-production. But be it in-camera or off-line, this transform that compresses the dynamic range is required if the image is to be displayed.
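As a rough illustration of what such a dynamic-range-compressing transform can look like, the sketch below uses a well-known textbook technique, the global photographic operator of Reinhard et al. (2002). It is not Toneo's algorithm; it only shows the general idea of a non-linear curve whose shape depends on a statistic of the image (here, the log-average luminance). The sample luminance values and the `key` default of 0.18 are assumptions chosen for the example.

```python
import math

def log_average(luminances, eps=1e-6):
    """Geometric mean of the luminances: one simple whole-image statistic."""
    return math.exp(sum(math.log(eps + l) for l in luminances) / len(luminances))

def tone_map(luminances, key=0.18):
    """Compress linear scene luminances into [0, 1) with an image-dependent curve."""
    avg = log_average(luminances)
    out = []
    for l in luminances:
        scaled = key * l / avg               # exposure derived from the image itself
        out.append(scaled / (1.0 + scaled))  # smooth roll-off toward 1 for highlights
    return out

# Hypothetical linear luminances spanning six orders of magnitude.
scene = [0.01, 0.5, 10.0, 500.0, 10_000.0]
display = tone_map(scene)

for s, d in zip(scene, display):
    print(f"{s:>10.2f} -> {d:.4f}")
```

Because the exposure is set from the image's own statistics, feeding the same scene at ten times the brightness yields nearly the same output. This is only the simplest form of image adaptivity, and far simpler than the vision model described in this document.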

The same situation arises for computer-generated images: in videogames, as in architectural design or 3D animation, physically realistic models of light-object interactions are used to render an image whose dynamic range has to be reduced for visualization on a regular screen. Given that Toneo solves this very same problem for cinema postproduction, where the quality standards are the highest, the potential of our technology is very clear for other scenarios such as image and video capture and display, and rendering in computer graphics. Possible applications include on-chip implementation in cameras, on-set monitoring for professional shoots, format conversion in HDR TV broadcast, HDR streaming or HDR projection, image enhancement for virtual reality headsets, and perceptually accurate visualization for architectural design, to name just a few.

References

[1] A. Van Hurkman, Color correction handbook: professional techniques for video and cinema. Pearson Education, 2013.

[2] B. Wark, A. Fairhall, and F. Rieke, “Timescales of inference in visual adaptation,” Neuron, vol. 61, no. 5, 2009.

[3] F. A. Dunn and F. Rieke, “The impact of photoreceptor noise on retinal gain controls,” Current opinion in neurobiology, vol. 16, no. 4, pp. 363–370, 2006.

[4] Cyriac P, Canham T, Kane D, Bertalmío M. Vision models fine-tuned by cinema professionals for High Dynamic Range imaging in movies. Multimedia Tools and Applications. 2021 Jan; 80(2):2537-63.

[5] Bertalmío, M., 2019. Vision models for high dynamic range and wide colour gamut imaging: techniques and applications. Academic Press.

[6] Serre, Thomas. "Deep learning: the good, the bad, and the ugly." Annual Review of Vision Science 5 (2019): 399-426.

[7] Marcus, Gary. "Deep learning: A critical appraisal." arXiv preprint arXiv:1801.00631 (2018).

[8] Choi, Charles Q. "7 Revealing Ways AIs Fail: Neural Networks

Download now and start achieving more natural and professional results in minutes. We are excited to announce that our software is now available for free public download. This plugin is compatible with Adobe Premiere for Mac. You can download and use the full version for free for 30 days—no strings attached, no fine print, no annoying pop-ups, and no payment walls. It’s time to start saving valuable time on your color grades! Our goal is to help you produce outstanding professional images with ease.

Select your software of preference and tell us where you want to see TONEO Prime plugin!

We are currently compatible with Adobe Premiere for Mac. If you join our waiting list, we’ll keep you updated on new features and upcoming releases for Adobe Premiere Windows, Final Cut Pro, and DaVinci Resolve. The Windows version is just around the corner, and we’ll announce it soon! We promise not to sell your data to third parties, and we won’t spam your inbox: we hate spam as much as you do. Expect only high-quality communication from us.